Opera is Bringing Local LLMs to Opera One

- Paul Thurrott

- Apr 03, 2024

Opera has been leaning heavily into AI as a competitive advantage for its alternative web browser. It was the first browser maker to add native AI capabilities to its app. And now it’s taking the next logical step.

“Opera is adding experimental support for 150 local LLM (Large Language Model) variants from approximately 50 families of models to its Opera One browser in developer stream,” Opera announced today. “This step marks the first time local LLMs can be easily accessed and managed from a major browser through a built-in feature. The local AI models are a complimentary addition to Opera’s online Aria AI service.”

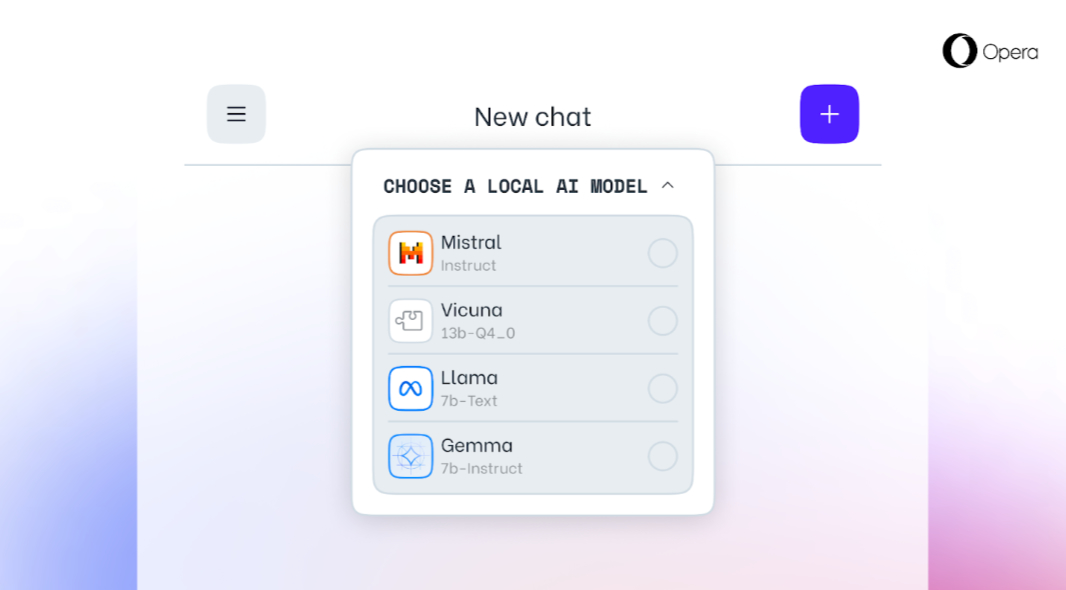

Opera One will support locally installed LLMs like Google Gemma, Meta Llama, Mistral AI Mixtral, and Vicuna, ensuring that users’ data is stored only on their own devices. This frees them to use generative AI (GenAI) functionality without sending that data to the cloud, where it might be used to train AI models or otherwise repurposed.

This new feature is available via the recently announced Opera One AI feature drop system, by which customers can opt in to early access to new AI advances. To test these models, you need to install the latest Opera Developer build and then follow the company’s instructions for activating this experimental new feature.